Large language models (LLMs) like ChatGPT, Perplexity, and Google’s AI Overviews are changing how people find local businesses. These systems don’t just crawl your website the way search engines do. They interpret language, infer meaning, and piece together your brand’s identity across the entire web. If your local visibility feels unstable, this shift is one of the biggest reasons.

Traditional local SEO tactics like Google Business Profile optimization, NAP (name, address, phone number) consistency, and review generation still matter. But now you’re also optimizing for models that need better context and more structured information. If those elements aren’t in place, you fade from LLM-generated answers even when your rankings look fine. If you’re focusing on a smaller local audience, it’s essential to know what to do about it.

Key Takeaways

- LLMs reshape how local results appear by pulling from entities, schema, and high-trust signals, not just rankings.

- Consistent information across the web gives AI models confidence when choosing which businesses to include in their answers.

- Reviews, citations, structured data, and natural-language content help LLMs understand what you do and who you serve.

- Traditional local SEO still drives visibility, but AI requires deeper clarity and stronger contextual signals.

- Improving your entity strength helps you appear more often in both organic search and AI-generated summaries.

How LLMs Impact Local Search

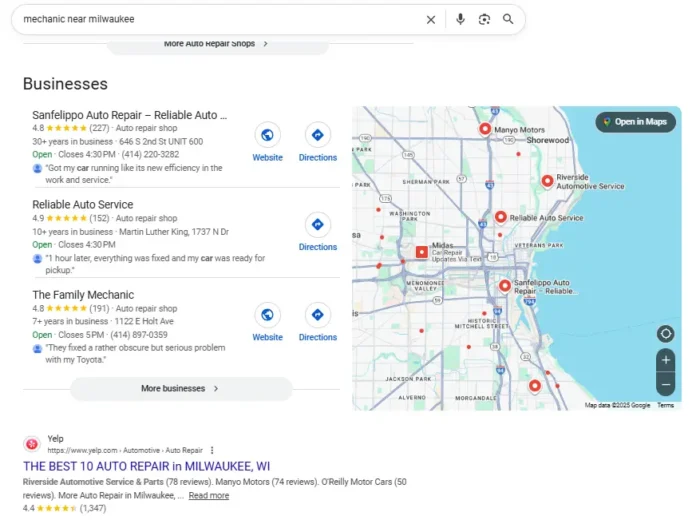

Traditional local search results present options: maps, listings, and organic rankings.

LLMs don’t simply list choices. They generate an answer based on the clearest, strongest signals available. If your business isn’t sending those signals consistently, you don’t get included.

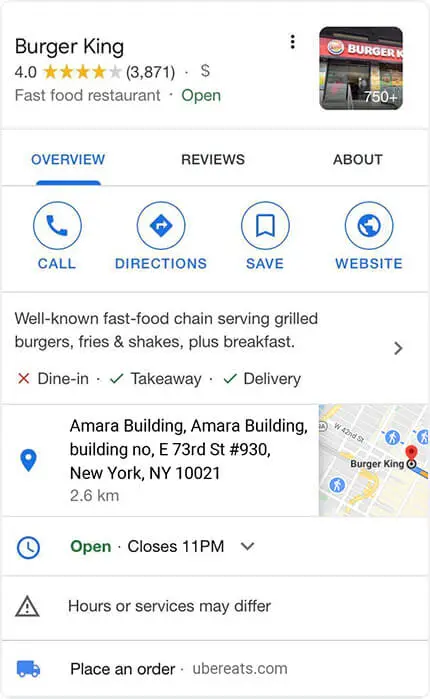

If your business information is inconsistent and your content is vague, the model is less likely to confidently associate you with a given search. That hurts visibility, even if your traditional rankings haven’t changed. As you can see above, these LLM responses are the first thing someone sees in Google, ahead of any organic listing. And that doesn’t account for the growing number of users who turn to LLMs like ChatGPT directly to answer their queries, never using Google at all.

How LLMs Process Local Intent

LLMs don’t use the same proximity-driven weighting as Google’s local algorithm. They infer local relevance from patterns in language and structured signals.

They look for:

- Reviews that mention service areas, neighborhoods, and staff names

- Schema markup that defines your business type and location

- Local mentions across directories, social platforms, and news sites

- Content that addresses questions in a city-specific or neighborhood-specific way

If customers mention that you serve a specific district, region, or neighborhood, LLMs absorb that. If your structured data includes service areas or specific location attributes, LLMs factor that in. If your content references local problems or conditions tied to your field, LLMs use those cues to understand where you fit.

This matters because LLMs don’t use precise GPS or IP address data at the time of search the way Google does. They rely on explicit mentions and conversational context, with at most a rough IP-derived location from the app to get a general idea, so their answers are less precisely tied to the searcher’s proximity.

These systems treat structured data as a source of truth. When it’s missing or incomplete, the model fills the gaps and often chooses competitors with stronger signals.
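One way to see how much location language your reviews already carry is to count it. The Python sketch below is only an illustration: the function name `count_area_mentions`, the sample reviews, and the Austin neighborhood list are assumptions made for the example, and it says nothing about how any LLM actually weighs review text.

```python
import re

def count_area_mentions(reviews, areas):
    """Count how many reviews mention each area (case-insensitive, whole phrase)."""
    counts = {area: 0 for area in areas}
    for text in reviews:
        for area in areas:
            # \b keeps "Mueller" from matching inside a longer word.
            pattern = r"\b" + re.escape(area) + r"\b"
            if re.search(pattern, text, flags=re.IGNORECASE):
                counts[area] += 1
    return counts

# Hypothetical sample data for illustration only.
reviews = [
    "Fixed our AC in Hyde Park the same day.",
    "Great service, came out to hyde park twice.",
    "Fast install in Mueller.",
]
areas = ["Hyde Park", "Mueller", "Zilker"]

print(count_area_mentions(reviews, areas))
# {'Hyde Park': 2, 'Mueller': 1, 'Zilker': 0}
```

An area with zero mentions, like Zilker here, is a candidate for review requests or content that names it explicitly.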

Why Local SEO Still Matters in an AI-Driven World of Search

Local SEO is still foundational. LLMs still need data from Google Business Profiles, reviews, NAP citations, and on-site content to understand your business.

These elements supply the contextual foundation that AI relies on.

The biggest difference is the level of consistency required. If your business description changes across platforms or your NAP details don’t match, AI models sense uncertainty. And uncertainty keeps you out of high-value generative answers. If a user runs a more specific branded query about you in an LLM, a lack of detail could mean outdated or incorrect information is presented about your business.

Local SEO gives you structure and stability. AI gives you new visibility opportunities. Both matter now, and both strengthen each other when done right.

Best Practices for Localized SEO for LLMs

To strengthen your visibility in both search engines and AI-generated results, your strategy has to support clarity, context, and entity-level consistency. These best practices help LLMs understand who you are and where you belong in local conversations.

Focus on Specific Audience Needs for Your Target Areas

Generic local pages aren’t as effective as they used to be. LLMs favor businesses that demonstrate real understanding of the communities they serve.

Write content that reflects:

- Neighborhood-specific issues

- Local climate or seasonal challenges

- Regulations or processes unique to your region

- Cultural or demographic details

If you’re a roofing company in Phoenix, talk about extreme heat and tile-roof repair. If you’re a dentist in Chicago, reference neighborhood landmarks and common questions patients in that area ask.

The more local and grounded your content feels, the easier it is for AI models to match your business to real local intent.

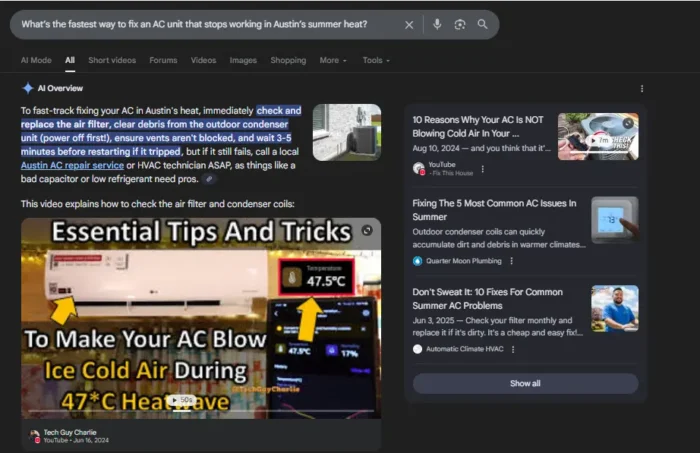

Phrase and Structure Content in Ways That Are Easy for LLMs to Parse

LLMs work best with content that’s structured clearly. That includes:

- Straightforward headers

- Short sections

- Natural-language FAQs

- Sentences that mirror how people ask questions

Users type full questions, so answer full questions.

Instead of writing “Austin HVAC services,” address:

“What’s the fastest way to fix an AC unit that stops working in Austin’s summer heat?”

LLMs understand and reuse content that leans into conversational patterns. The more your structure supports extraction, the more likely the model is to include your business in summaries.

Emphasize Your Localized E-E-A-T Markers

LLMs evaluate credibility through experience, expertise, authority, and trust signals, just as humans do.

Strengthen your E-E-A-T through:

- Case details tied to real neighborhoods

- Expert commentary from team members

- Author bios that reflect credentials

- Community involvement or partnerships

- Reviews that speak to specific outcomes

LLMs treat these details as proof you know what you’re talking about. When they appear consistently across your web presence, your business feels more trustworthy to AI and is more likely to be recommended.

Use Entity-Based Markup

Schema markup is one of the clearest ways to communicate your identity to AI. LocalBusiness schema, service area definitions, department structures, and product or service attributes all help LLMs recognize your entity as distinct and legitimate.

The more complete your markup is, the stronger your entity becomes. And strong entities show up more often in AI answers.
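To make that concrete, here is a minimal Python sketch that assembles LocalBusiness-style JSON-LD using schema.org property names (`address`, `telephone`, `areaServed`). The helper `local_business_jsonld` and every business detail below are placeholders invented for illustration, borrowing the Phoenix roofing example from earlier; swap in your real data and the schema.org type that fits your business.

```python
import json

def local_business_jsonld(name, business_type, street, city, region,
                          postal_code, phone, url, areas_served):
    """Build a schema.org LocalBusiness-style JSON-LD dictionary."""
    return {
        "@context": "https://schema.org",
        "@type": business_type,  # e.g. a LocalBusiness subtype
        "name": name,
        "url": url,
        "telephone": phone,
        "address": {
            "@type": "PostalAddress",
            "streetAddress": street,
            "addressLocality": city,
            "addressRegion": region,
            "postalCode": postal_code,
        },
        # One entry per city or area the business serves.
        "areaServed": [{"@type": "City", "name": area} for area in areas_served],
    }

# Placeholder business details for illustration only.
markup = local_business_jsonld(
    name="Example Roofing Co.",
    business_type="RoofingContractor",
    street="100 Example Street",
    city="Phoenix",
    region="AZ",
    postal_code="85001",
    phone="+1-602-555-0100",
    url="https://www.example.com",
    areas_served=["Phoenix", "Scottsdale", "Tempe"],
)

print(json.dumps(markup, indent=2))
```

The printed JSON would typically go inside a `<script type="application/ld+json">` tag on the relevant page. Generating it from one source of record makes it easier to keep the markup in step with your listings.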

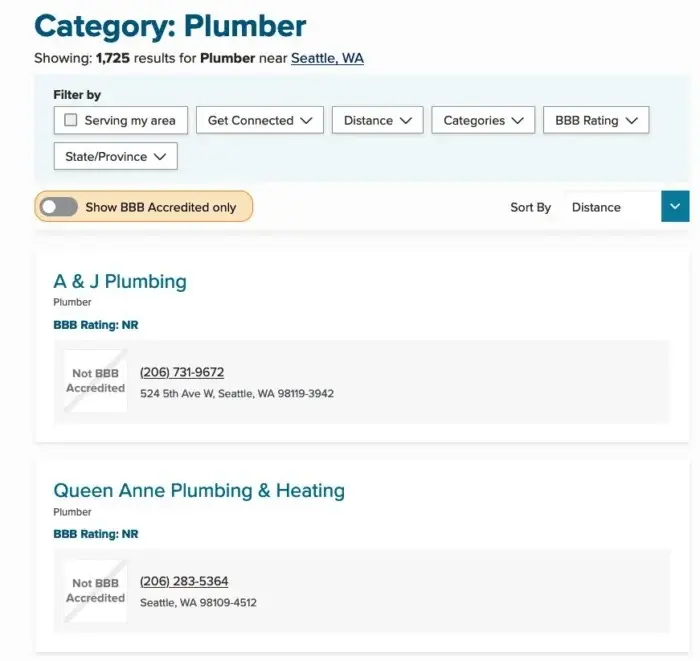

Spread and Standardize Your Brand Presence Online

LLMs analyze your entire digital footprint, not just your website. They compare how consistently your brand appears across:

- Social platforms

- Business directories

- Local organizations

- Review sites

- News or community publications

If your name, address, phone number, hours, or business description differ between platforms, AI detects the inconsistency and becomes less confident referencing you. It’s also important to keep more subjective elements, like your brand voice and value propositions, consistent across all of these platforms.

One thing you may not be aware of is that ChatGPT uses Bing’s index, so Bing Places is one area to prioritize when building your presence. ChatGPT won’t necessarily mirror how Bing displays results in its own search engine, but it uses the data. Apple Maps, Google Maps, and Waze are also priority places to list your NAP information.

Standardization builds authority. Authority increases visibility.
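A quick consistency audit can be scripted. The sketch below is a rough Python illustration, assuming you have already collected your listings by hand or by export: the platform keys, business details, and the helper names `normalize_nap` and `find_nap_mismatches` are all hypothetical, and the phone handling assumes 10-digit North American numbers.

```python
import re

def normalize_nap(listing):
    """Reduce a listing to a comparable (name, address, phone) tuple."""
    name = re.sub(r"\s+", " ", listing["name"]).strip().lower()
    # Drop punctuation so "St." and "St" compare equal.
    address = re.sub(r"[^\w\s]", "", listing["address"]).lower()
    address = re.sub(r"\s+", " ", address).strip()
    # Keep digits only; assume 10-digit North American numbers.
    phone = re.sub(r"\D", "", listing["phone"])[-10:]
    return (name, address, phone)

def find_nap_mismatches(listings):
    """Return platforms whose NAP differs from the first (reference) listing."""
    platforms = list(listings)
    reference = normalize_nap(listings[platforms[0]])
    return [p for p in platforms[1:] if normalize_nap(listings[p]) != reference]

# Hypothetical listings for illustration only.
listings = {
    "website": {"name": "Example Dental", "address": "100 Main St., Chicago, IL",
                "phone": "(312) 555-0100"},
    "bing_places": {"name": "Example Dental", "address": "100 Main St Chicago IL",
                    "phone": "+1 312-555-0100"},
    "apple_maps": {"name": "Example Dental Group", "address": "100 Main St., Chicago, IL",
                   "phone": "312.555.0100"},
}

print(find_nap_mismatches(listings))
# ['apple_maps']
```

Formatting differences such as punctuation or a country code are normalized away, so only real discrepancies, like the changed business name on the third listing, get flagged.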

Use Localized Content Styles Like Comparison Guides and FAQs

LLMs excel at interpreting content formats that break complex ideas into digestible pieces.

Comparison guides, cost breakdowns, neighborhood-specific FAQs, and troubleshooting explainers all translate extremely well into AI-generated answers. These formats help the model understand your business with precision.

If your content mirrors the structure of how people search, AI can more easily extract, reuse, and reference your insights.
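FAQ content can also be described in structured form. As a hedged sketch, the Python below turns question-and-answer pairs into schema.org FAQPage JSON-LD; the function name `faq_page_jsonld` is an assumption for this example, and the sample pairs are two of the questions from this article’s own FAQ section.

```python
import json

def faq_page_jsonld(qa_pairs):
    """Build schema.org FAQPage JSON-LD from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in qa_pairs
        ],
    }

qa_pairs = [
    ("What is local SEO for LLMs?",
     "It's the process of optimizing your business so LLMs can recognize "
     "and surface you for local queries."),
    ("Do reviews impact LLM-driven searches?",
     "Yes. The language inside reviews helps AI understand your services "
     "and your location."),
]

faq_markup = faq_page_jsonld(qa_pairs)
print(json.dumps(faq_markup, indent=2))
```

Keeping the visible FAQ text and the markup generated from the same list of pairs avoids the two drifting apart.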

Internal Linking Still Matters

Internal linking builds clarity, something AI depends on. It shows which concepts relate to each other and which topics matter most.

Join:

- Service pages to related location pages

- Blog posts to the services they support

- Local FAQs to broader category content

Strong internal linking helps LLMs follow the path of your expertise and understand your authority in context.
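Gaps in those connections are easy to miss by eye. Here is a small Python sketch, assuming you already have a map of each page to the internal URLs it links to (from a crawl export, for instance). The URL paths and the function name `pages_missing_links` are hypothetical.

```python
def pages_missing_links(links, sources, targets):
    """Return source pages that link to none of the target pages."""
    target_set = set(targets)
    return [
        page for page in sources
        if not target_set & set(links.get(page, []))
    ]

# Hypothetical site structure: page -> internal links found on that page.
links = {
    "/services/ac-repair": ["/locations/austin", "/blog/ac-tips"],
    "/services/furnace-install": ["/about"],
    "/blog/ac-tips": ["/services/ac-repair"],
}
service_pages = ["/services/ac-repair", "/services/furnace-install"]
location_pages = ["/locations/austin", "/locations/round-rock"]

print(pages_missing_links(links, service_pages, location_pages))
# ['/services/furnace-install']
```

The same function works for the other pairings in the list, for example blog posts as sources and service pages as targets.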

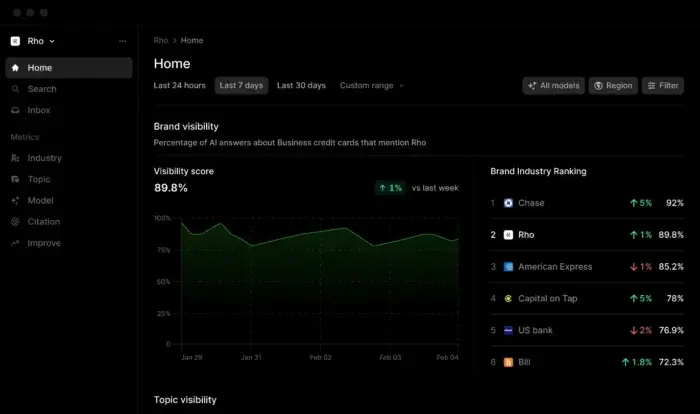

Tracking Results in the LLM Era

Rankings matter, but they no longer tell the full story. To understand your AI visibility, track:

- Branded search growth

- Google Search Console impressions

- Referral traffic from AI tools

- Increases in unlinked brand mentions

- Review volume and review language trends

This is easier with the arrival of dedicated AI visibility tools like Profound.

The goal here is to have a way to see whether LLMs are pulling your business into their summaries, even when clicks don’t occur.

As zero-click results grow, these new metrics become essential.
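Referral traffic from AI tools is one of the easier metrics to approximate yourself. The Python sketch below tallies referrer URLs by hostname. The hostname list is an assumption and will need maintaining, the sample referrers are made up, and many AI-driven visits arrive with no referrer at all, so treat the counts as a lower bound.

```python
from collections import Counter
from urllib.parse import urlparse

# Assumed referrer hostnames; extend or correct for your own analytics data.
AI_REFERRER_HOSTS = {
    "chatgpt.com": "ChatGPT",
    "perplexity.ai": "Perplexity",
    "gemini.google.com": "Gemini",
    "copilot.microsoft.com": "Copilot",
}

def classify_referrer(referrer_url):
    """Return the AI tool name for a referrer URL, or None if it isn't one."""
    host = (urlparse(referrer_url).hostname or "").lower()
    if host.startswith("www."):
        host = host[4:]
    return AI_REFERRER_HOSTS.get(host)

def count_ai_referrals(referrer_urls):
    """Tally visits per AI tool from a list of referrer URLs."""
    counts = Counter()
    for url in referrer_urls:
        tool = classify_referrer(url)
        if tool:
            counts[tool] += 1
    return dict(counts)

# Hypothetical referrers, as they might appear in an analytics export.
referrers = [
    "https://chatgpt.com/",
    "https://www.perplexity.ai/search?q=roofers",
    "https://www.google.com/",
    "https://chatgpt.com/c/abc",
    "",
]

print(count_ai_referrals(referrers))
# {'ChatGPT': 2, 'Perplexity': 1}
```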

FAQs

What is local SEO for LLMs?

It’s the process of optimizing your business so LLMs can recognize and surface you for local queries.

How do I optimize my listings for AI-generated results?

Start with accurate NAP data, strong schema, and content written in natural language that reflects how locals ask questions.

What signals do LLMs use to determine local relevance?

Entities, schema markup, citations, review language, and contextual signals such as landmarks or neighborhoods.

Do reviews impact LLM-driven searches?

Yes. The language inside reviews helps AI understand your services and your location.

Conclusion

LLMs are rewriting the rules of local discovery, but strong local SEO still supplies the signals these models depend on. When your entity is clear, your citations are consistent, and your content reflects the real needs of your community, AI systems can understand your business with confidence.

These same principles sit at the core of both effective LLM SEO and modern local SEO strategy. When you strengthen your entity, refine your citations, and create content grounded in real local intent, you improve your visibility everywhere: organic rankings, map results, and AI-generated answers alike.